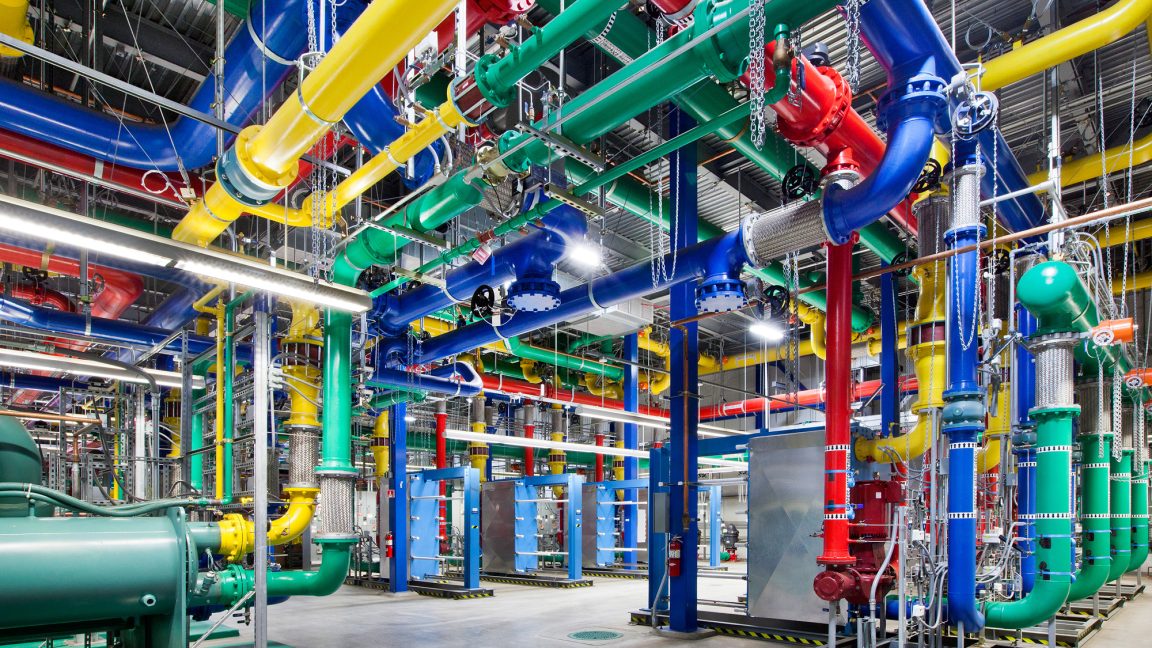

Google’s AI infrastructure chief Amin Vahdat told an all-hands meeting that the company must double its serving capacity every six months to meet rising AI demand, aiming for roughly a 1000x increase over the next 4–5 years while holding costs and energy use near today’s levels.
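The two figures in that target can be checked against each other with simple compounding. The sketch below assumes capacity doubles exactly once every six months with no pauses; the function name `growth_factor` is illustrative and not something from Google's presentation.

```python
def growth_factor(years: float, doubling_period_years: float = 0.5) -> float:
    """Capacity multiple after `years`, assuming one clean doubling per period."""
    return 2 ** (years / doubling_period_years)


# Compare the endpoints of the 4-5 year window quoted above.
for years in (4, 4.5, 5):
    print(f"{years} years -> {growth_factor(years):,.0f}x")
```

Under that assumption, four years gives 8 doublings (256x) and five years gives 10 doublings (1,024x), so the "roughly 1000x" figure corresponds to the five-year end of the range.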

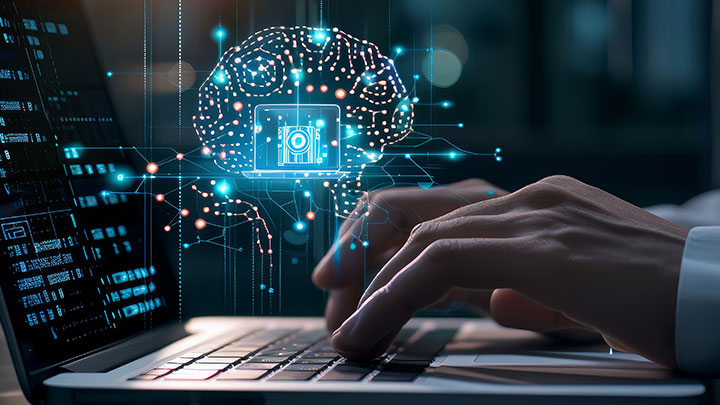

The plan emphasizes more than money: it hinges on collaboration across teams and co-designing hardware and software to achieve higher reliability, performance, and scalability without a proportional rise in power consumption.

OpenAI and other rivals are pursuing substantial data-center expansion as part of a broader race to supply AI compute. OpenAI’s Stargate partnership with SoftBank and Oracle is reported to involve six US data centers and investments that could run into the hundreds of billions of dollars, with the aim of pushing capacity toward multi-gigawatt scale and supporting hundreds of millions of users.

Google’s leaders have signaled that much of the debate over demand centers on how much of it reflects organic user interest and how much comes from AI features integrated into core products like Search, Gmail, and Workspace; it remains unclear whether the boost in usage is voluntary or feature-driven.

Beyond raw spending, the executive stressed that the objective is to build infrastructure that is not only larger but also more reliable and scalable than anything available elsewhere, underscoring that the AI infrastructure race remains the most expensive and strategic battleground in the industry.
